Hi readers! A very common integration scenario is needing an API that transforms XML to JSON or JSON to XML. I ran into this on a recent project, where all our developers needed a common API for exactly that. My idea was to build the API with an API Management policy, which takes almost no time. Since our team was already using API Management to host and share its APIs, this little XML <-> JSON converter was easy to share across the team.

| Scenario | JSON to XML | XML to JSON |
| --- | --- | --- |
| HTTP method | POST | POST |
| Headers | `Content-Type: application/json`, `sourceformat: json`, `destinationformat: xml`, `Ocp-Apim-Subscription-Key: yoursubscriptionkey` | `Content-Type: application/xml`, `sourceformat: xml`, `destinationformat: json`, `Ocp-Apim-Subscription-Key: yoursubscriptionkey` |
| Body | `{ "hello": "world" }` | `<Document> <hello>world</hello> </Document>` |
| Expected output | `<Document> <hello>world</hello> </Document>` | `{ "hello": "world" }` |

So, we built an API called Format Conversion, which takes the sourceformat and destinationformat headers as input, along with the request body, and performs the conversion.
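To make the input/output pairs from the table concrete, here is a minimal local sketch in Python. It is only an approximation for flat objects like the `hello`/`world` sample: the function names are my own, and the real API Management policies also deal with attributes, arrays, and namespaces, so their output may differ in details.

```python
import json
import xml.etree.ElementTree as ET


def json_to_xml(json_text: str, root_name: str = "Document") -> str:
    """Wrap a flat JSON object in a root element, one child element per key."""
    data = json.loads(json_text)
    root = ET.Element(root_name)
    for key, value in data.items():
        child = ET.SubElement(root, key)
        child.text = str(value)
    # encoding="unicode" returns a str without an XML declaration
    return ET.tostring(root, encoding="unicode")


def xml_to_json(xml_text: str) -> str:
    """Turn the text-only children of the root element into a flat JSON object."""
    root = ET.fromstring(xml_text)
    # Assumption: the root element is dropped, matching the sample in the table
    return json.dumps({child.tag: child.text for child in root})


if __name__ == "__main__":
    print(json_to_xml('{"hello": "world"}'))
    # <Document><hello>world</hello></Document>
    print(xml_to_json("<Document> <hello>world</hello> </Document>"))
    # {"hello": "world"}
```

This is handy for writing down what you expect from the API before you test it; it is not a replacement for the policy itself.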

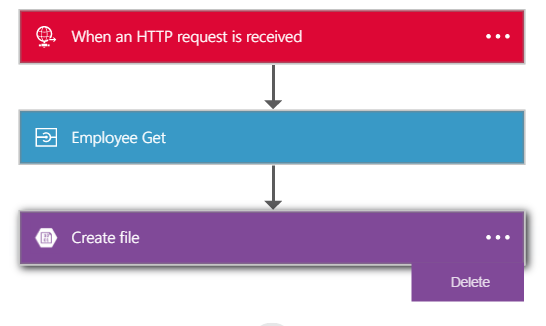

Next, add an operation named FormatConversion to an API in API Management.

Then add the following policy under the Inbound processing section of that operation.

    <policies>
        <inbound>
            <base />
            <choose>
                <!-- XML in, JSON out. GetValueOrDefault avoids an exception when a header is missing. -->
                <when condition="@(context.Request.Headers.GetValueOrDefault("sourceformat", "").ToLower() == "xml" && context.Request.Headers.GetValueOrDefault("destinationformat", "").ToLower() == "json")">
                    <xml-to-json kind="direct" apply="always" consider-accept-header="false" />
                    <!-- Send the converted request body straight back; no backend is called. -->
                    <return-response>
                        <set-status code="200" />
                        <set-header name="Content-Type" exists-action="override">
                            <value>application/json</value>
                        </set-header>
                        <set-body>@(context.Request.Body.As<string>())</set-body>
                    </return-response>
                </when>
                <!-- JSON in, XML out. -->
                <when condition="@(context.Request.Headers.GetValueOrDefault("sourceformat", "").ToLower() == "json" && context.Request.Headers.GetValueOrDefault("destinationformat", "").ToLower() == "xml")">
                    <json-to-xml apply="always" consider-accept-header="false" parse-date="false" />
                    <return-response>
                        <set-status code="200" />
                        <set-header name="Content-Type" exists-action="override">
                            <value>text/xml</value>
                        </set-header>
                        <set-body>@(context.Request.Body.As<string>())</set-body>
                    </return-response>
                </when>
                <!-- Missing or unsupported header values. -->
                <otherwise>
                    <return-response>
                        <set-status code="400" />
                        <set-header name="Content-Type" exists-action="override">
                            <value>application/json</value>
                        </set-header>
                        <set-body template="liquid">
                        {
                            "message": "Invalid request parameters."
                        }
                        </set-body>
                    </return-response>
                </otherwise>
            </choose>
        </inbound>
        <backend>
            <base />
        </backend>
        <outbound>
            <base />
        </outbound>
        <on-error>
            <base />
        </on-error>
    </policies>

Now save the policy and test it. If you get stuck on any step, post a comment and I will reply as soon as I can.

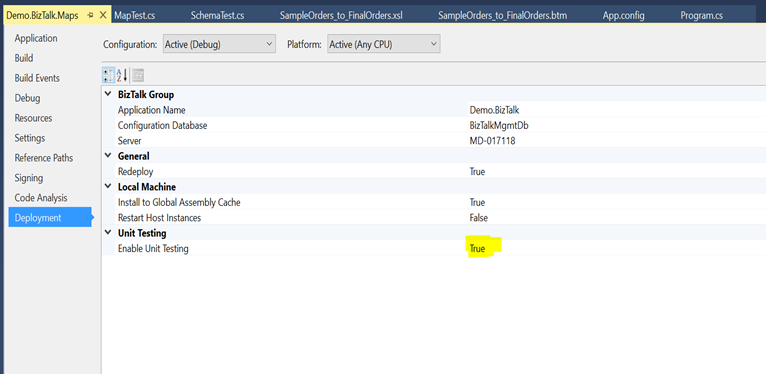

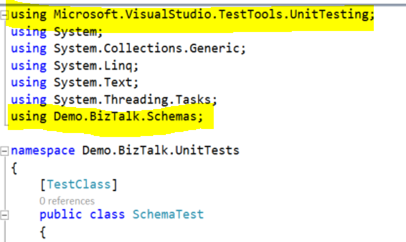

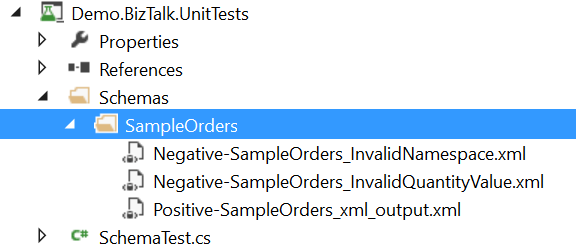
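If you would rather test from a script than from the portal, a small Python client along these lines should do. Treat it as a sketch: the `ENDPOINT` URL, the function names, and the `Content-Type` mapping are placeholders of mine, so substitute your own gateway URL, API path, and subscription key.

```python
import urllib.request

# Hypothetical gateway URL; replace with your own API Management instance and path.
ENDPOINT = "https://your-apim-instance.azure-api.net/formatconversion"

# Assumed Content-Type per source format.
CONTENT_TYPES = {"json": "application/json", "xml": "application/xml"}


def build_conversion_request(body: str, source: str, destination: str,
                             subscription_key: str,
                             url: str = ENDPOINT) -> urllib.request.Request:
    """Build (but do not send) a POST request with the headers the policy reads."""
    headers = {
        "Content-Type": CONTENT_TYPES[source],
        "sourceformat": source,
        "destinationformat": destination,
        "Ocp-Apim-Subscription-Key": subscription_key,
    }
    return urllib.request.Request(url, data=body.encode("utf-8"),
                                  headers=headers, method="POST")


def send(request: urllib.request.Request) -> str:
    """Send the request and return the response body as text.

    A non-2xx status (for example the policy's 400) raises urllib.error.HTTPError.
    """
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    request = build_conversion_request('{ "hello": "world" }', "json", "xml",
                                       "yoursubscriptionkey")
    print(send(request))
```

Building the request separately from sending it makes it easy to inspect the headers first, which is where most mistakes with this API tend to happen.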

Now build the solution.
